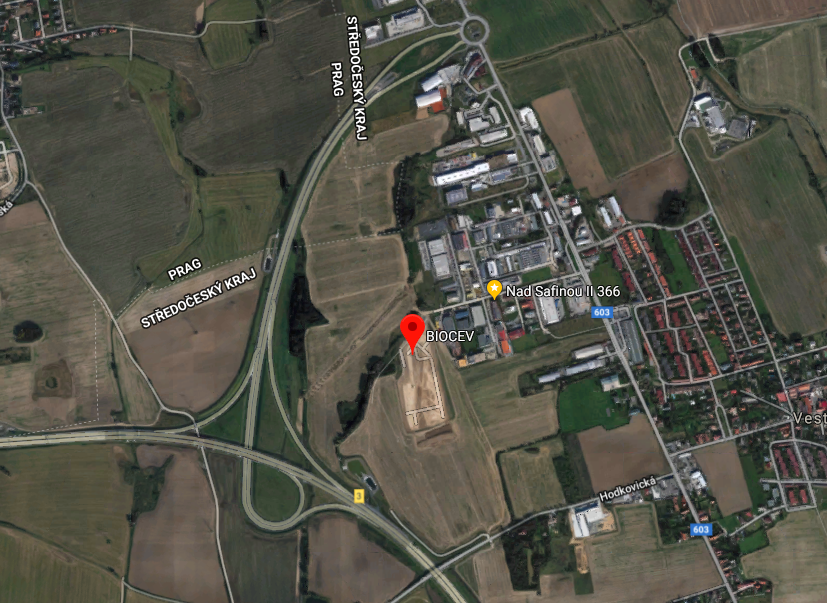

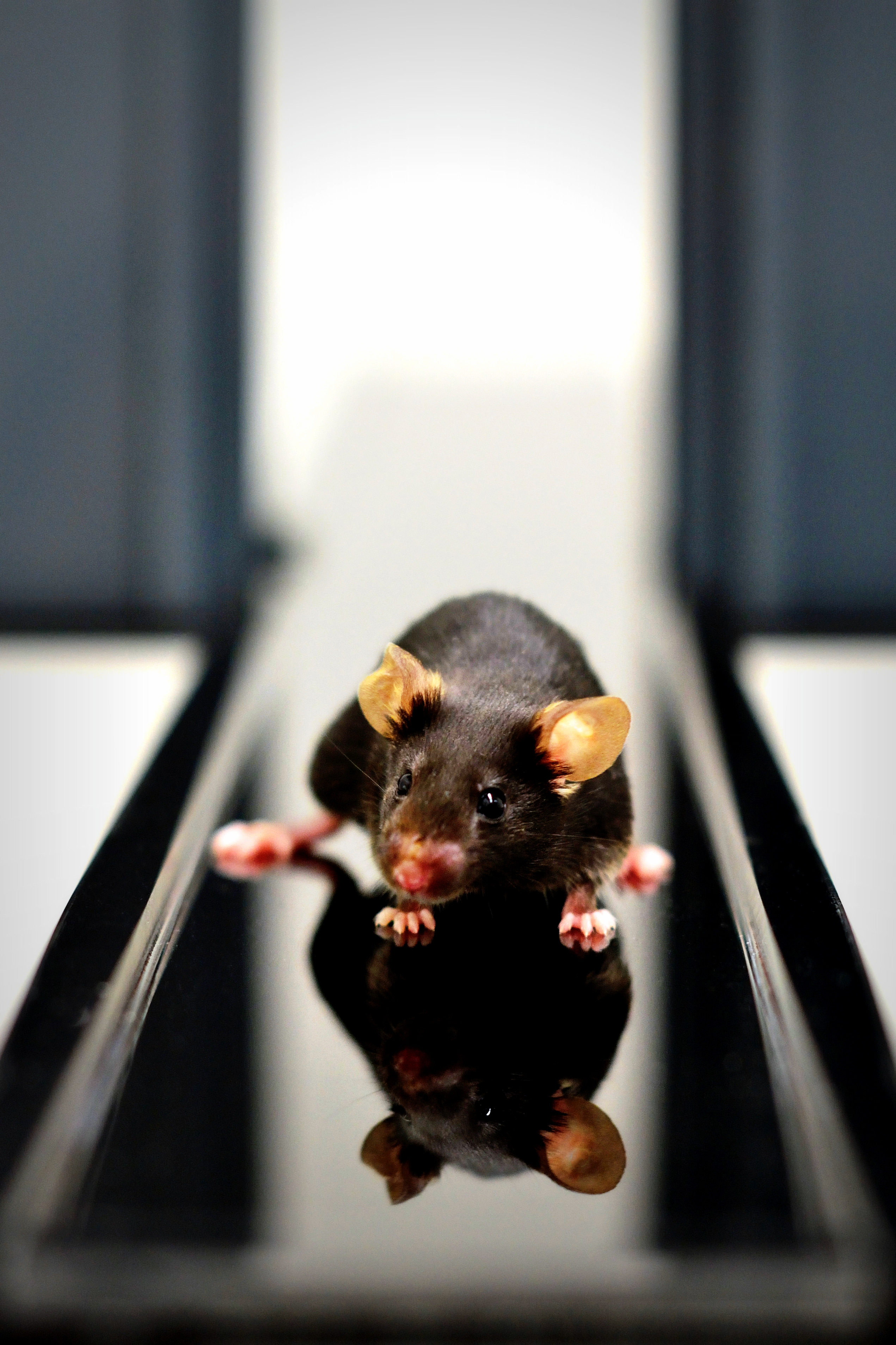

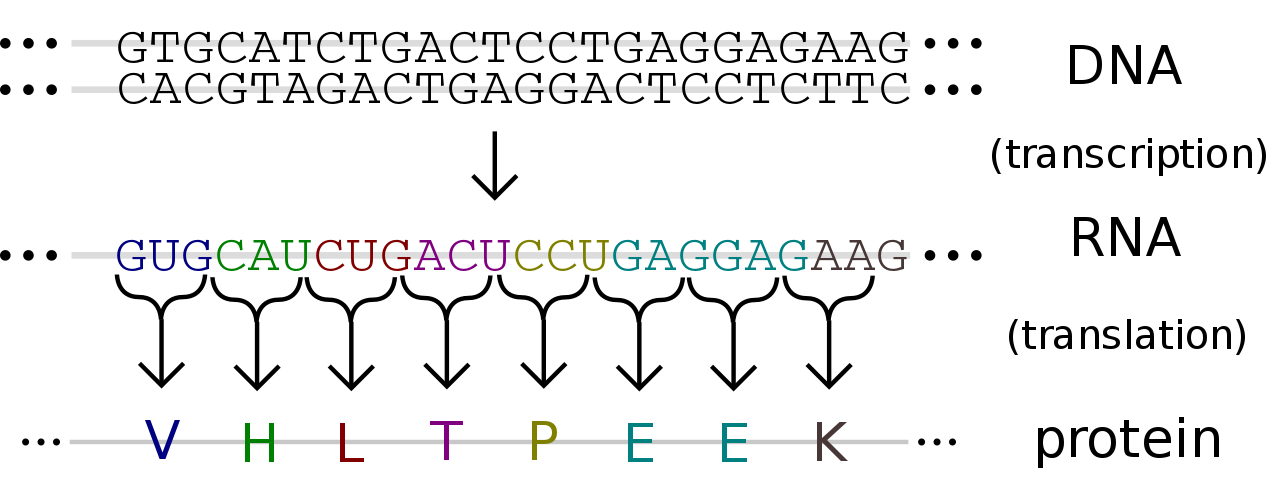

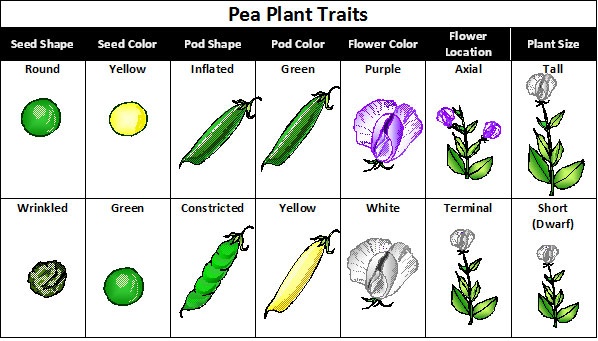

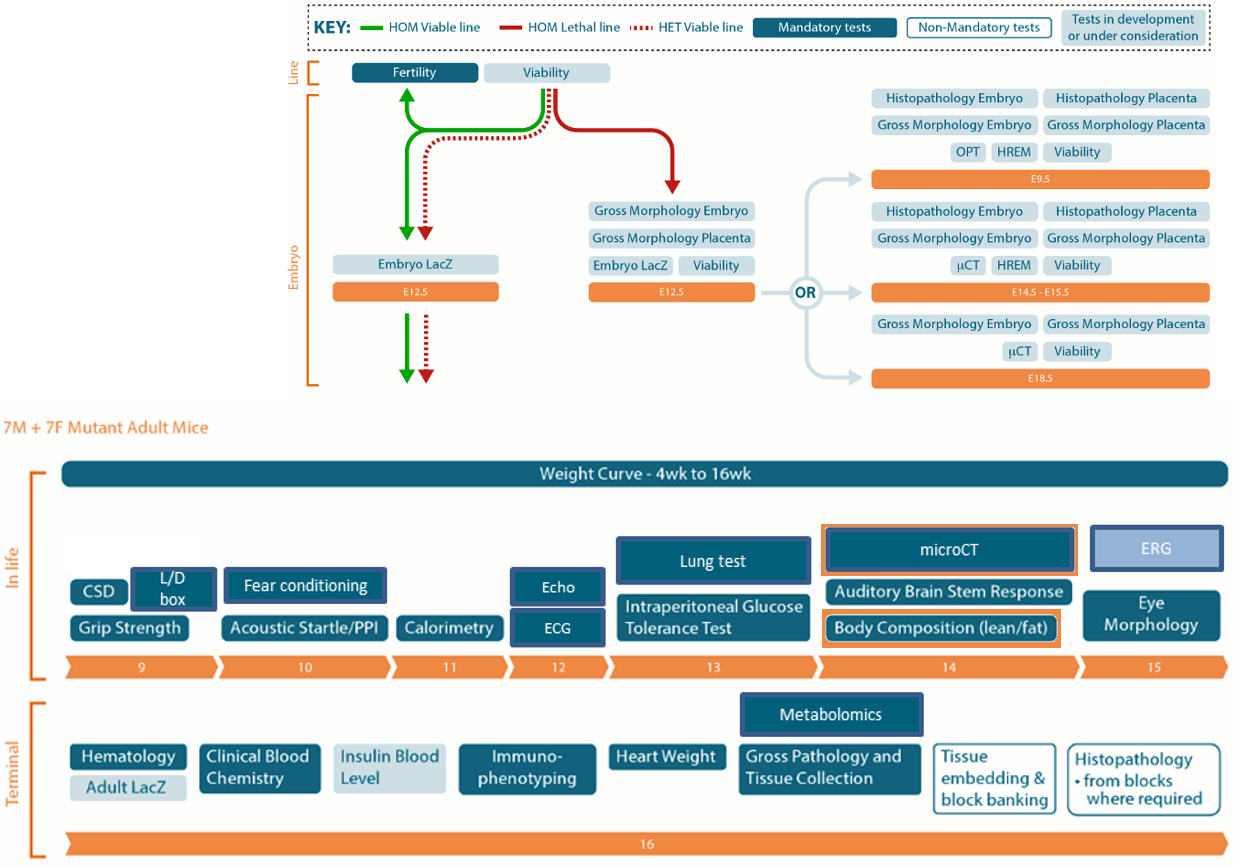

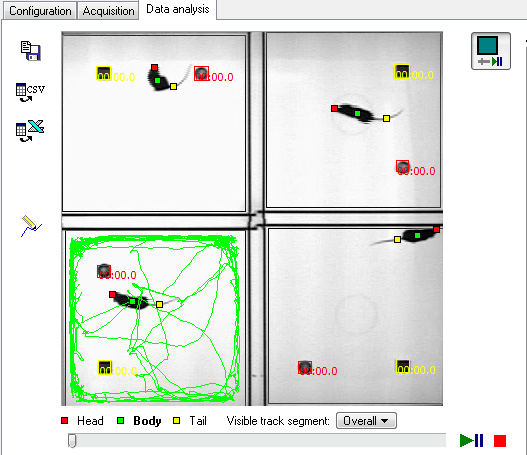

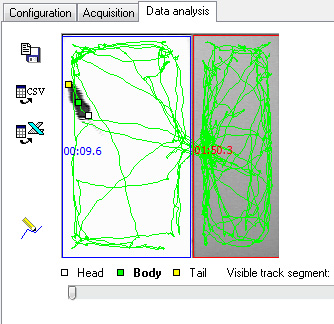

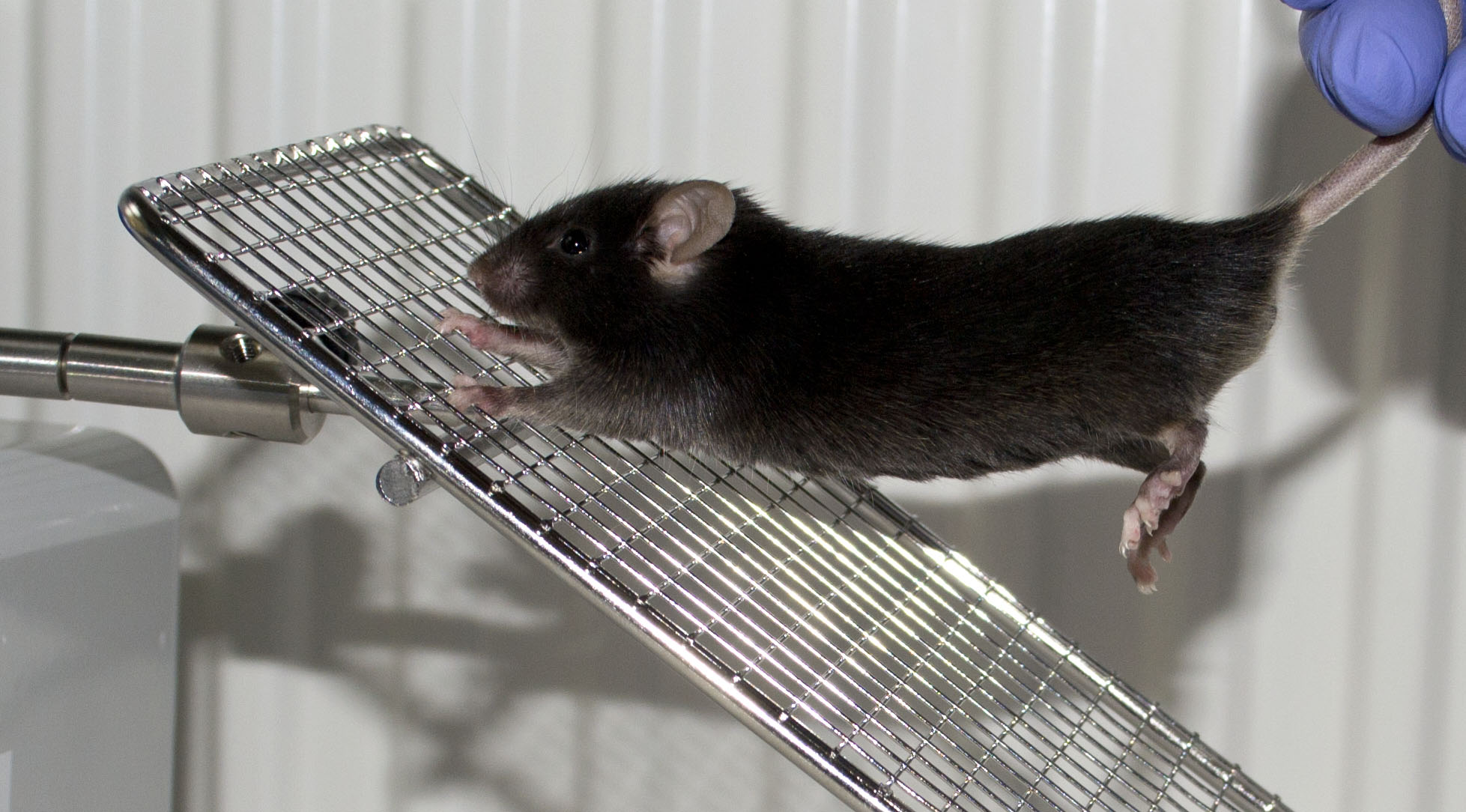

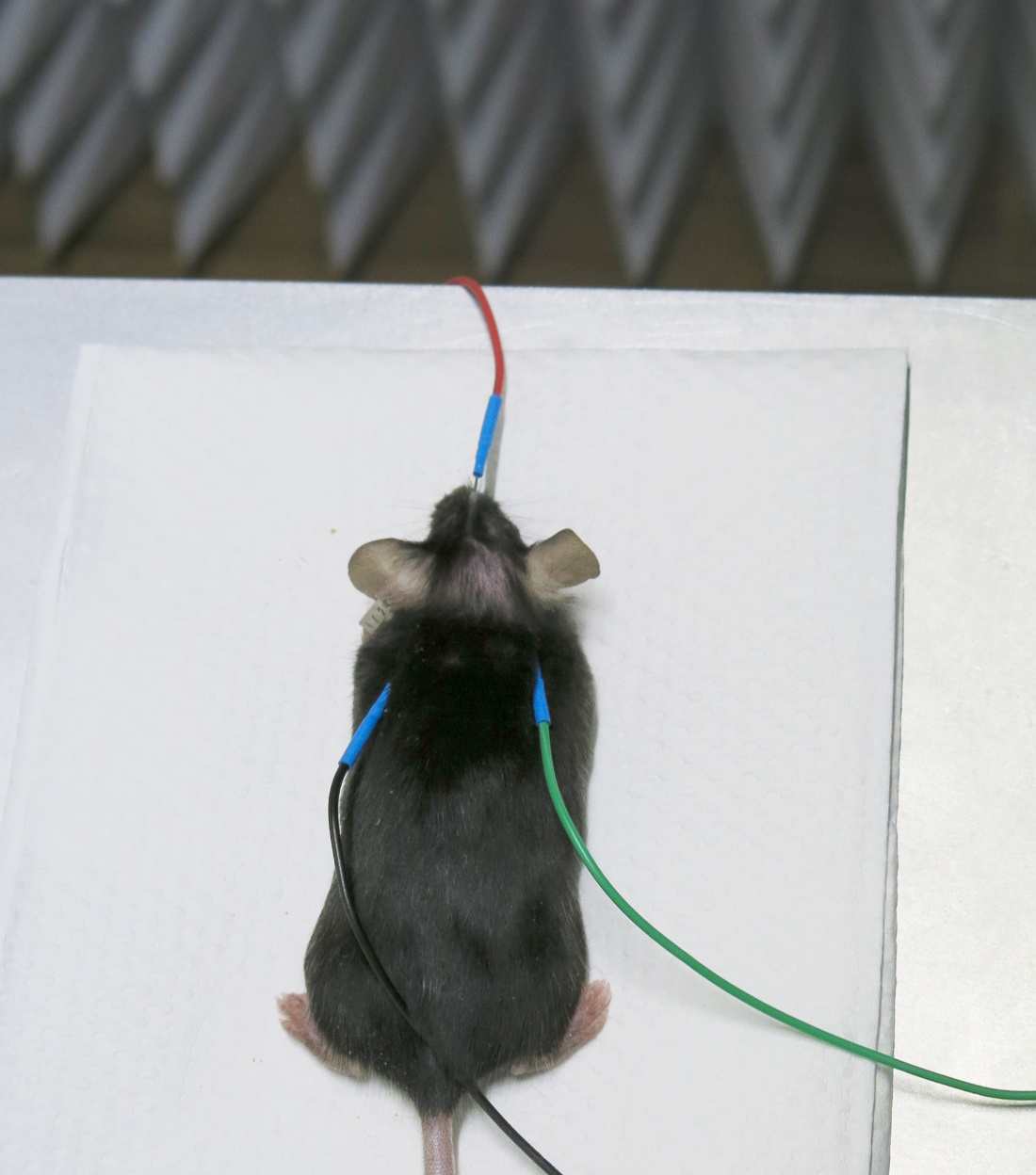

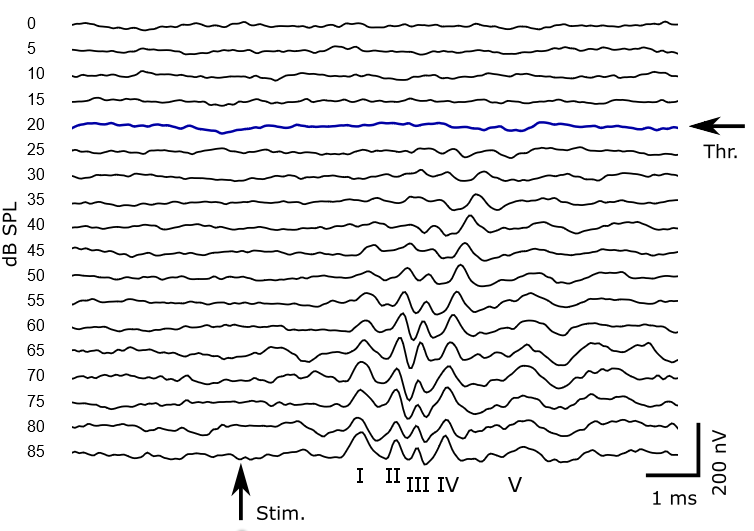

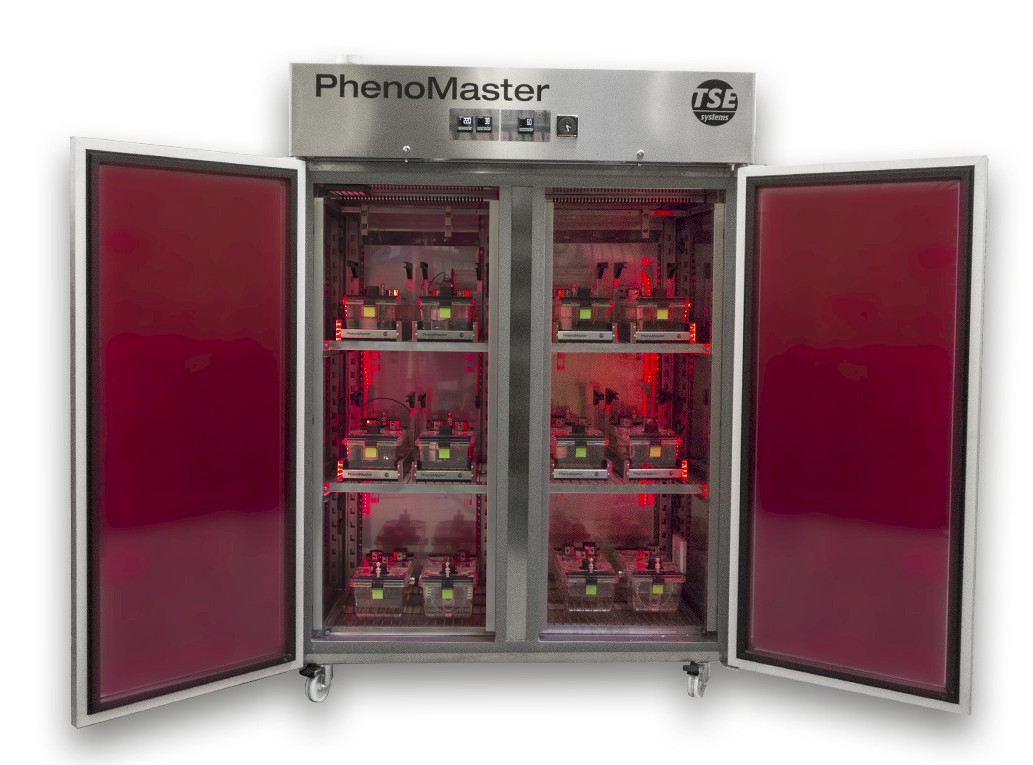

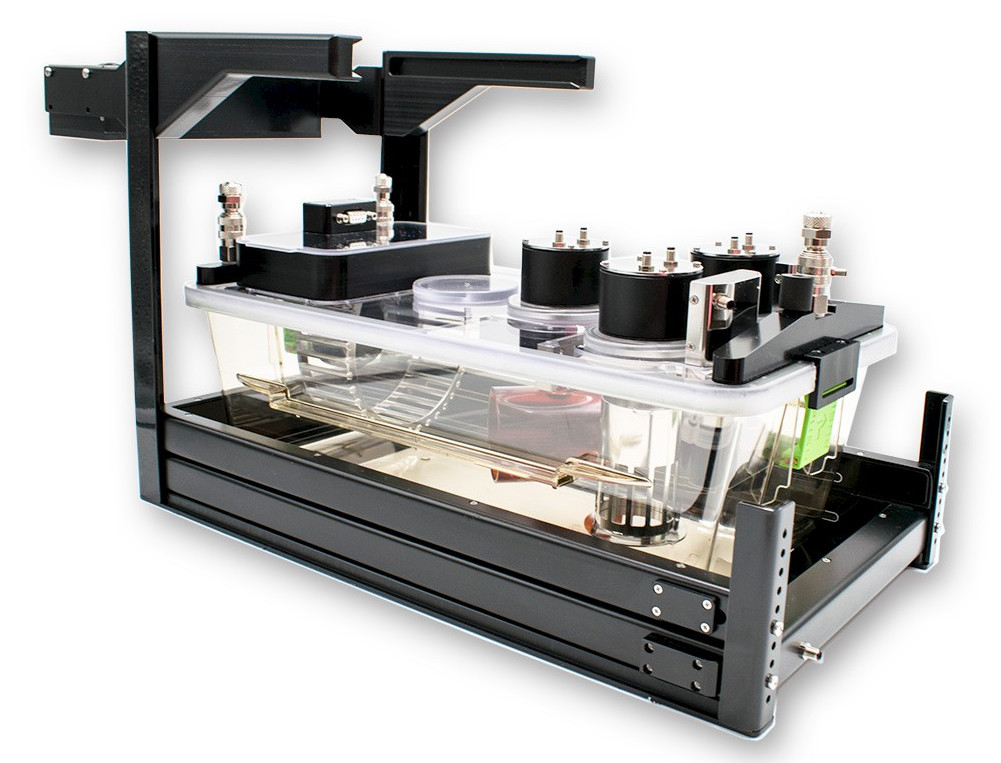

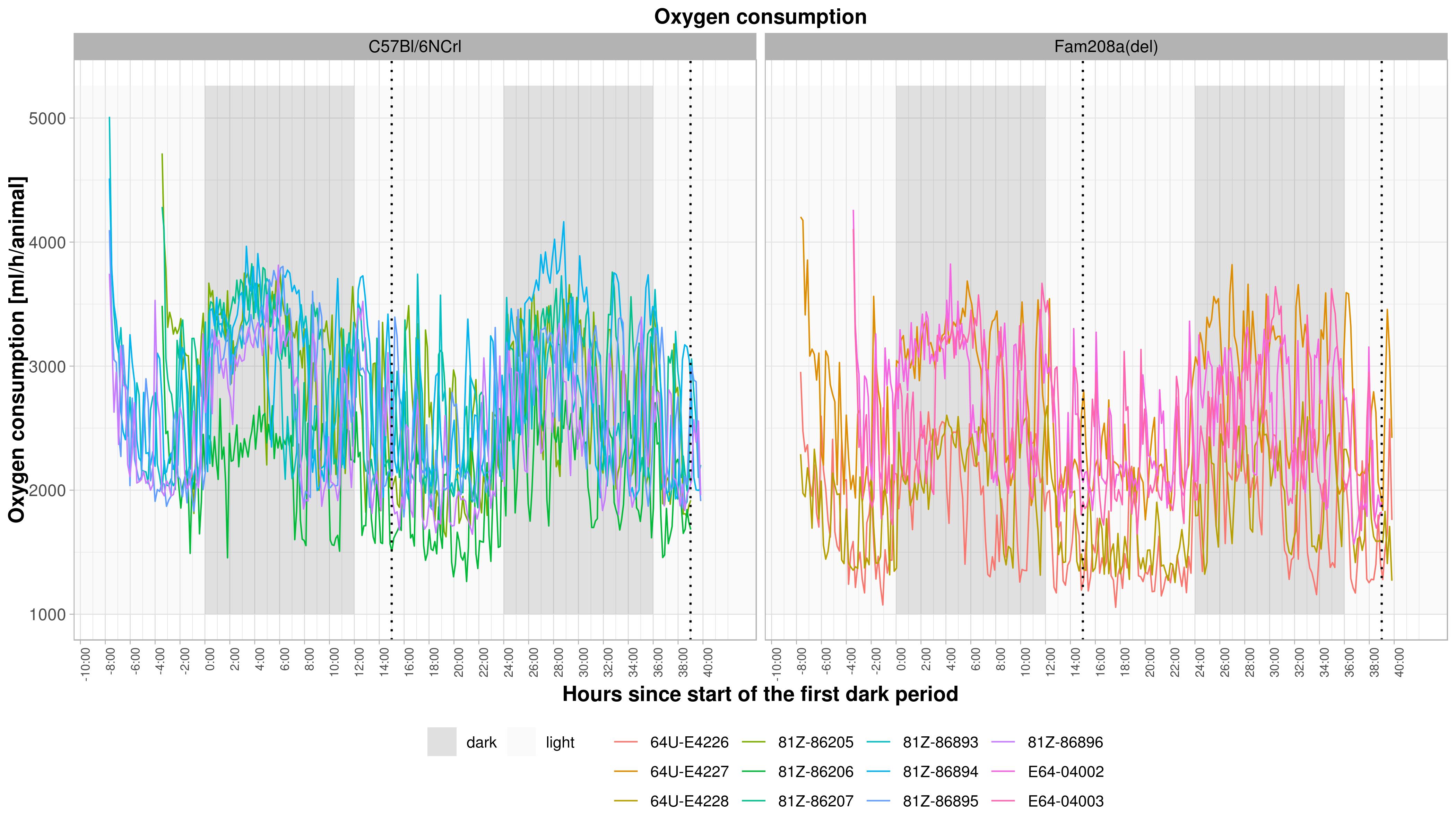

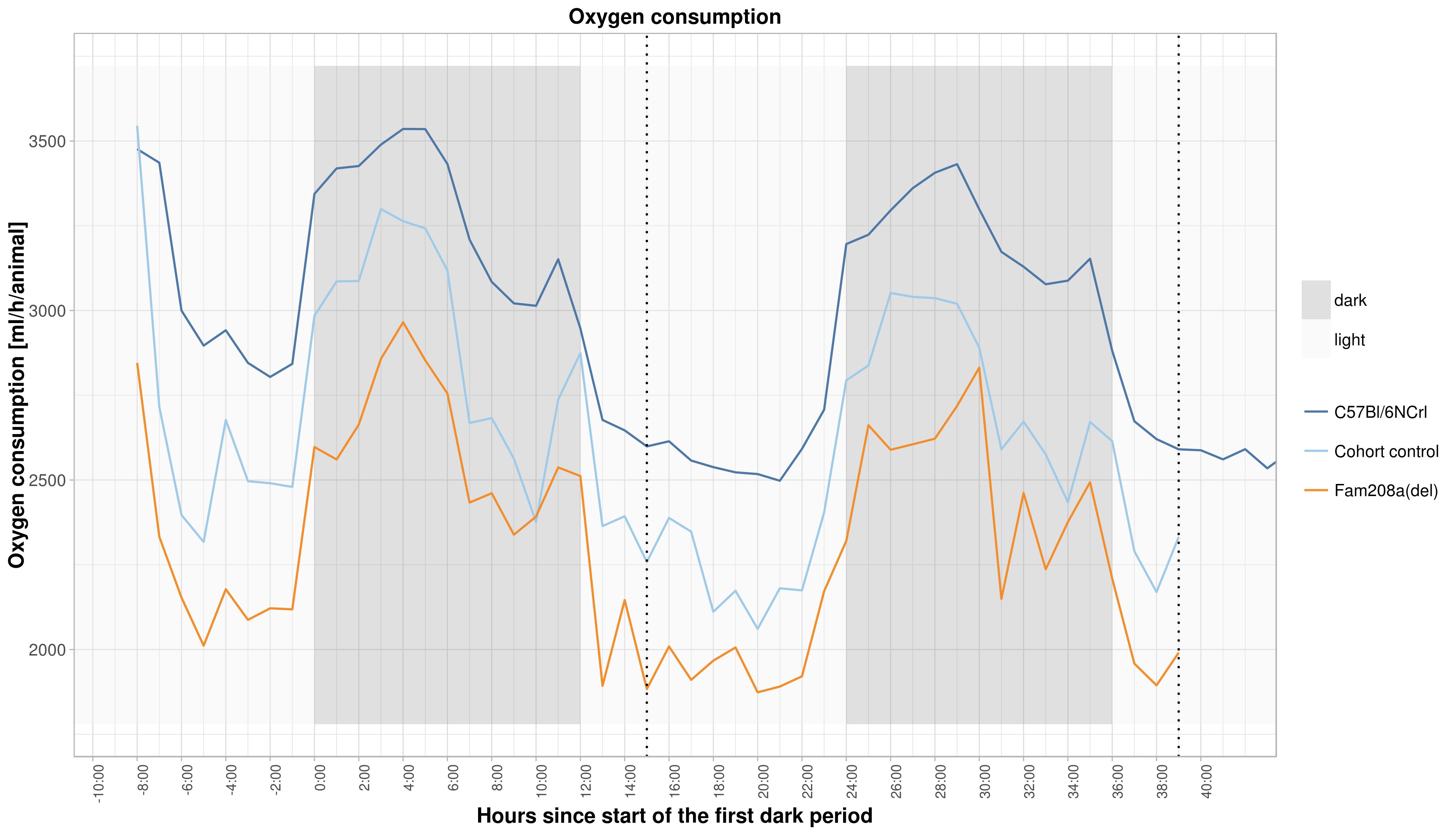

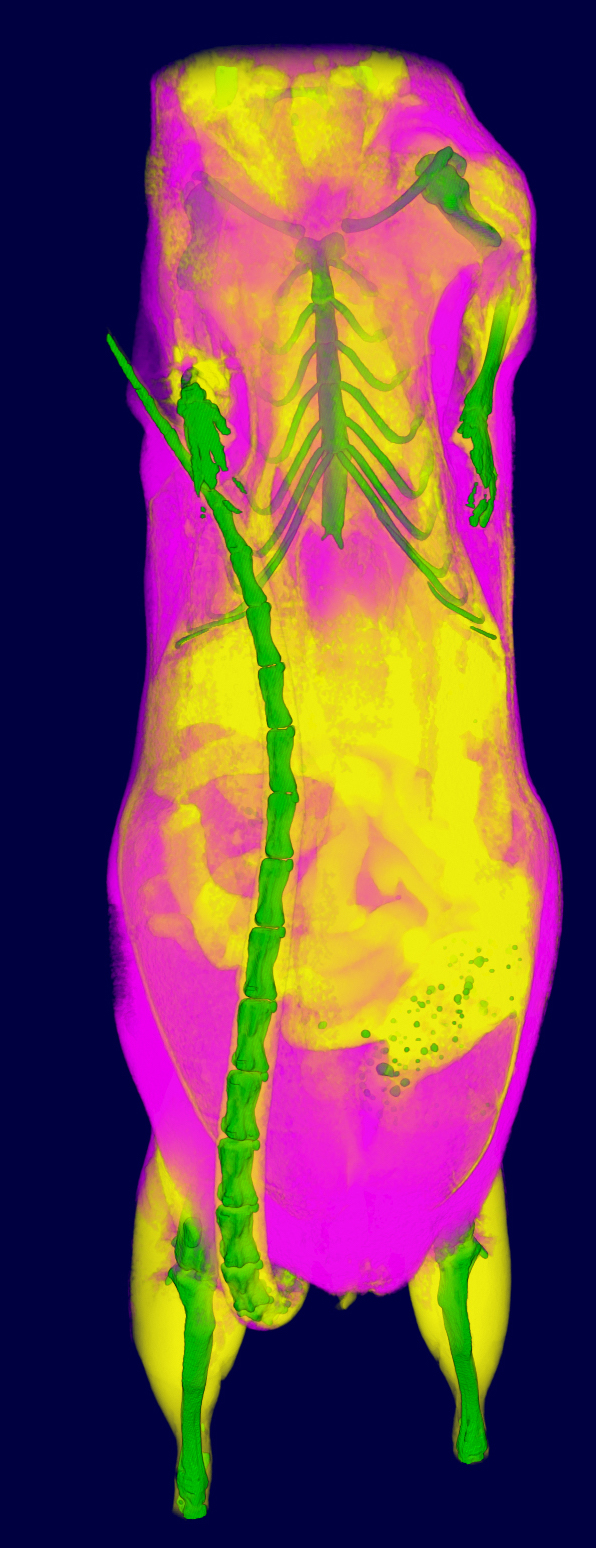

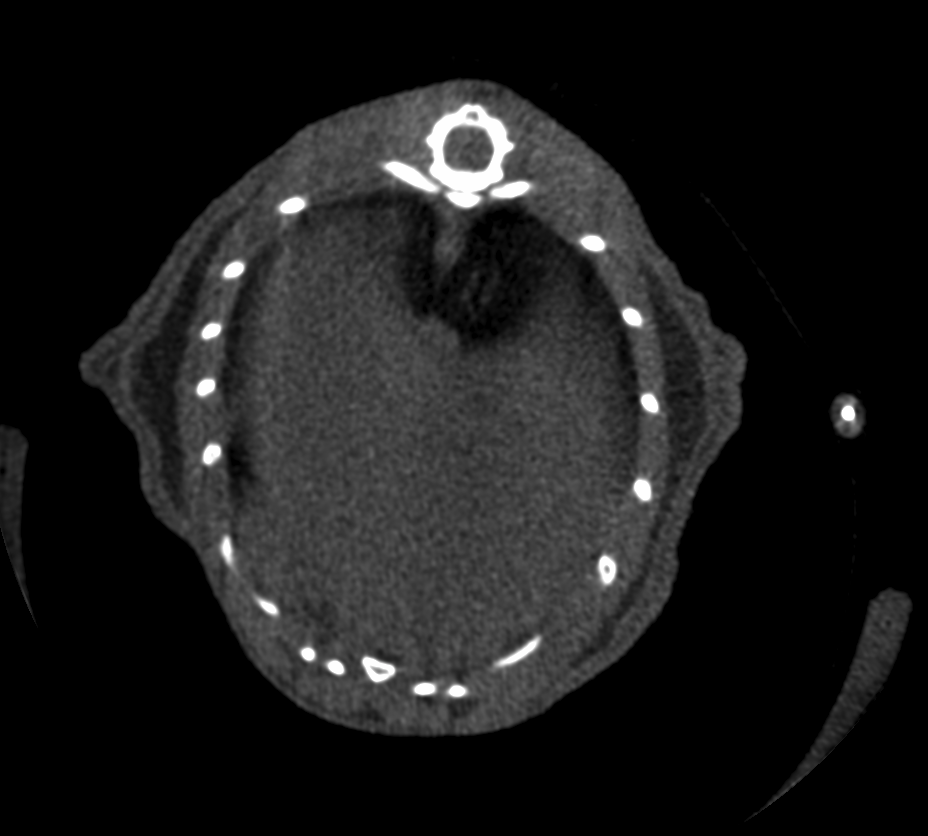

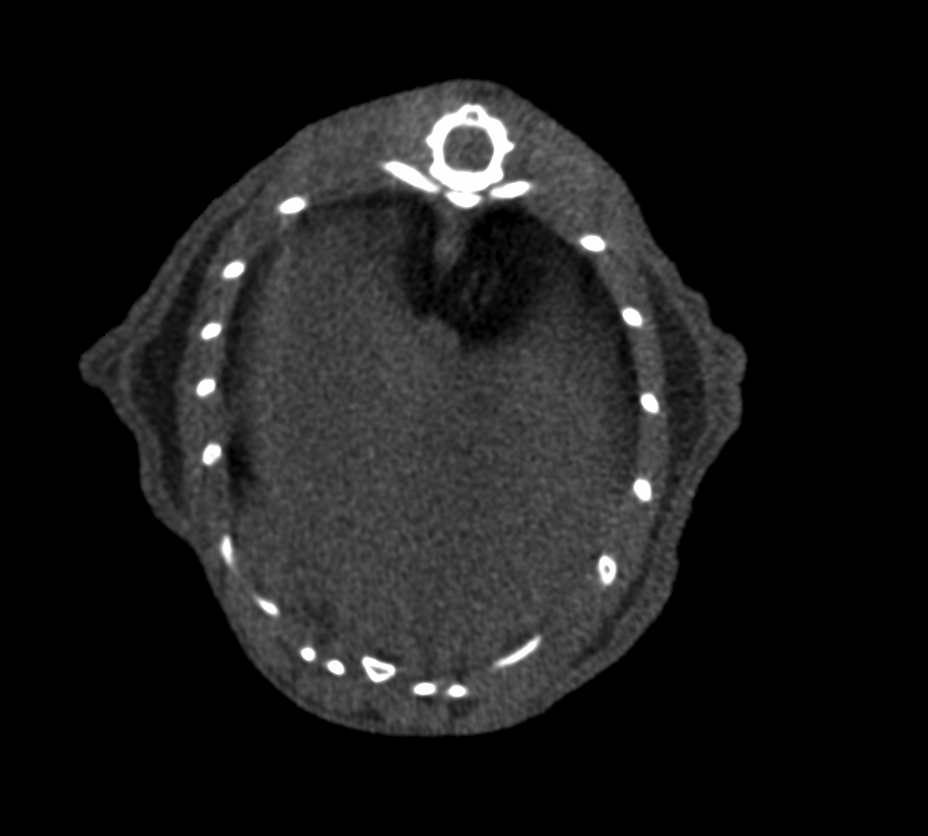

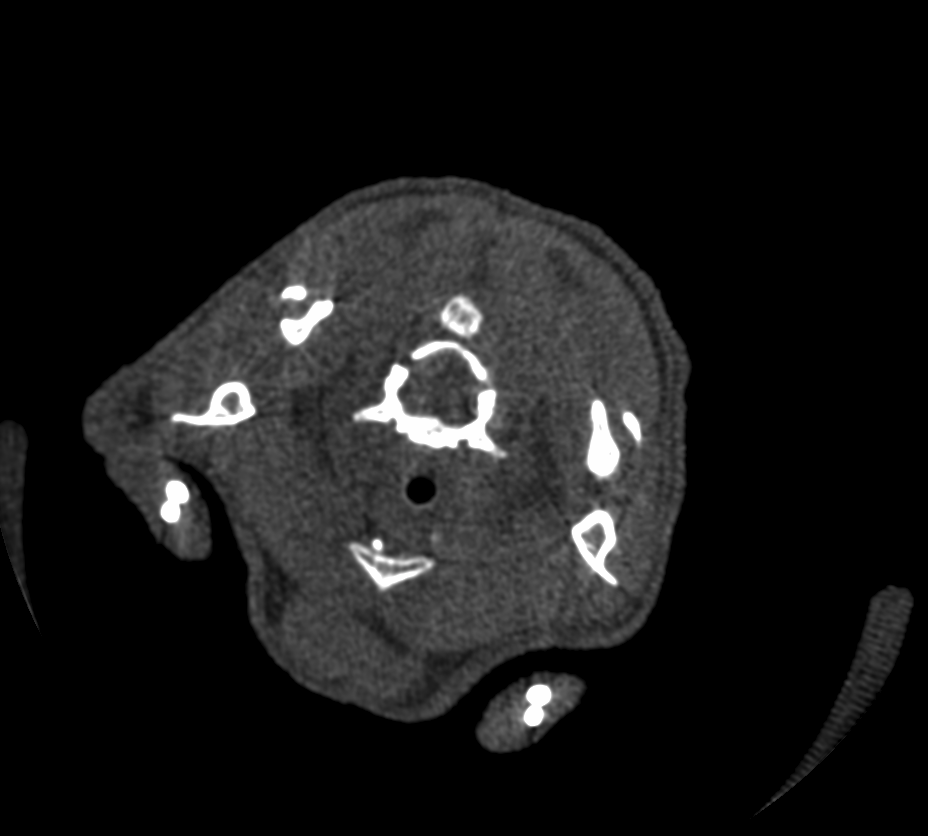

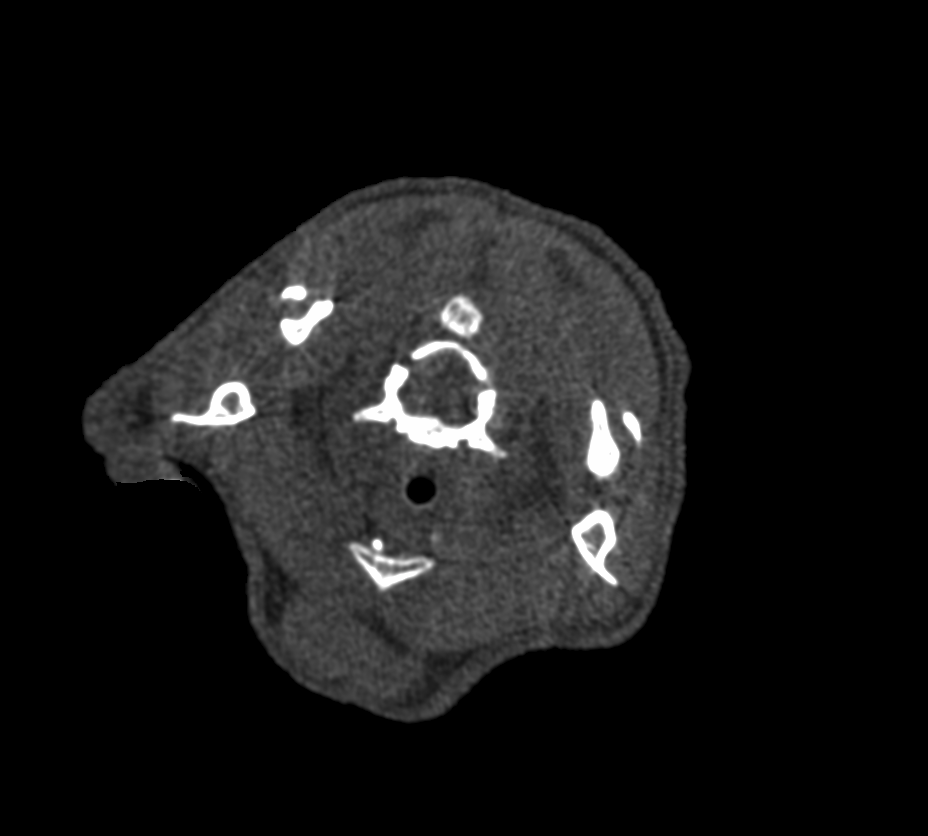

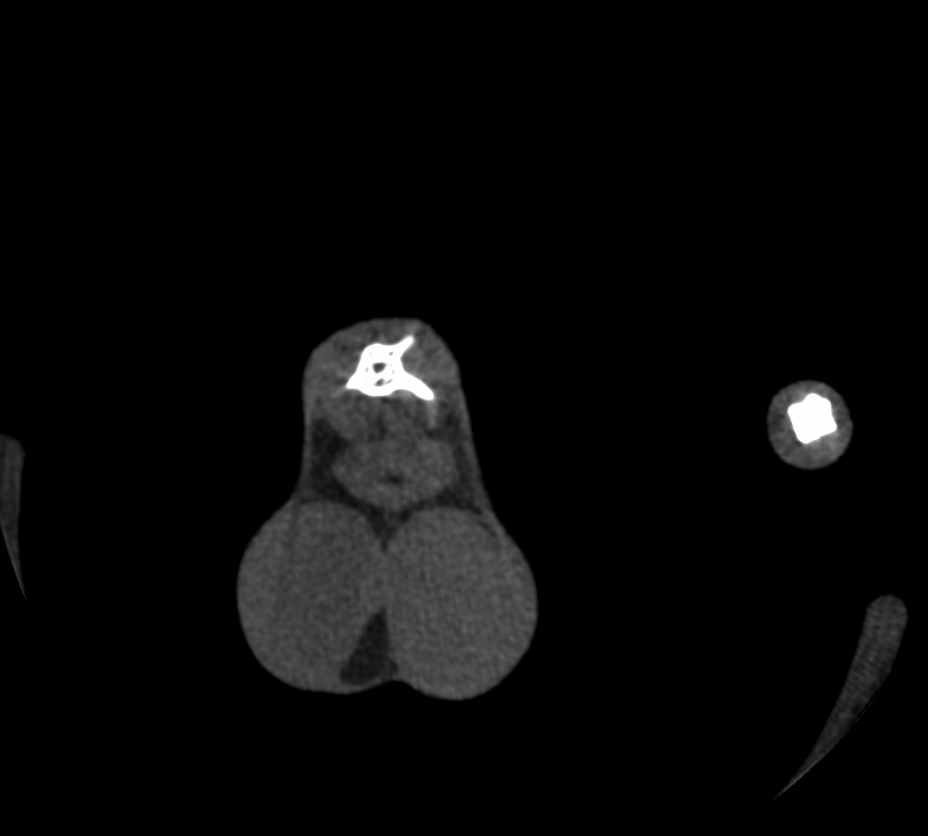

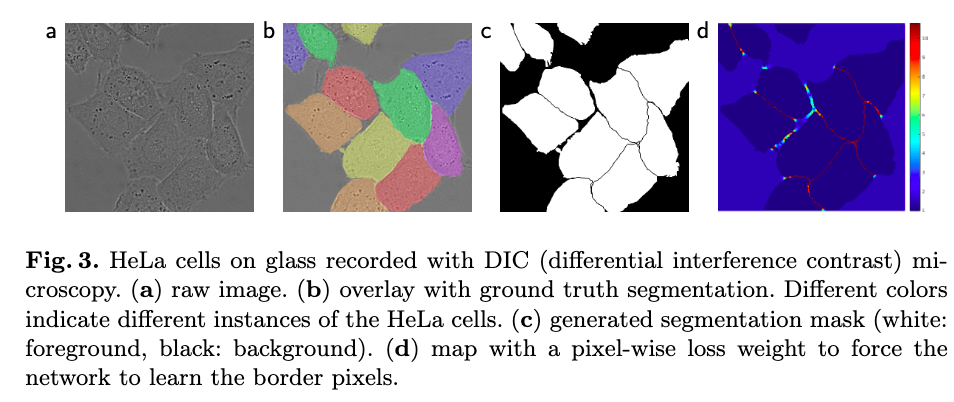

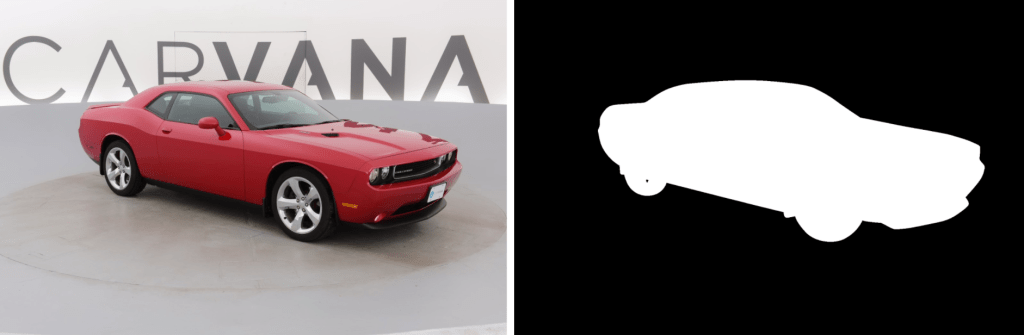

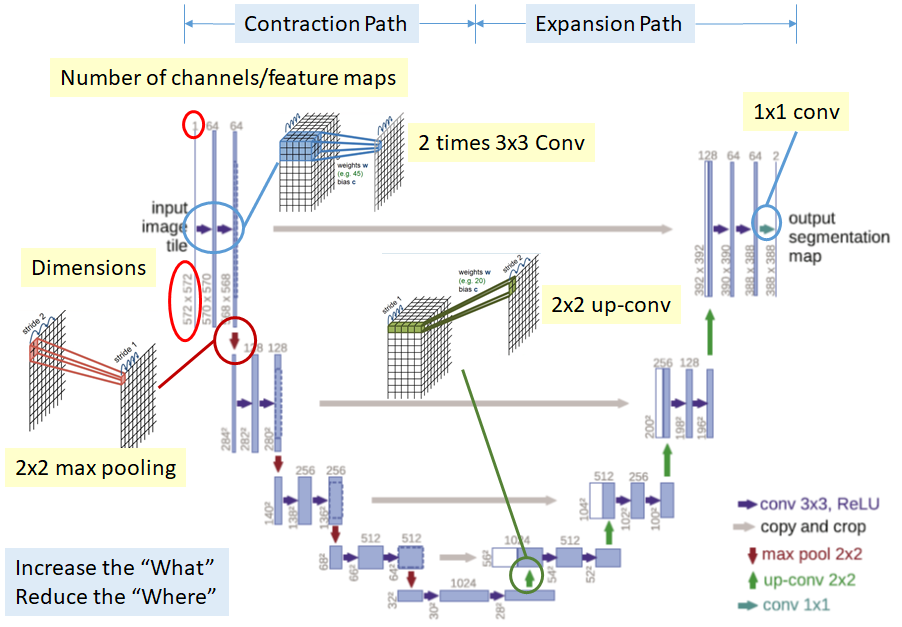

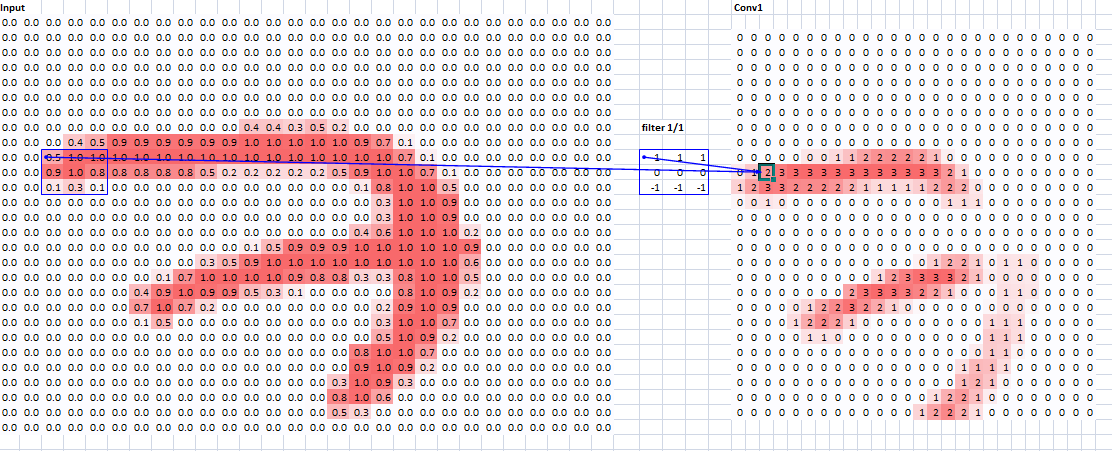

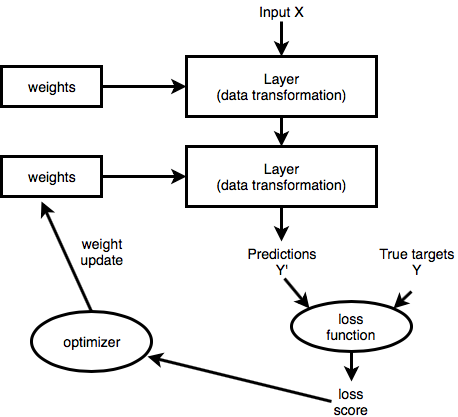

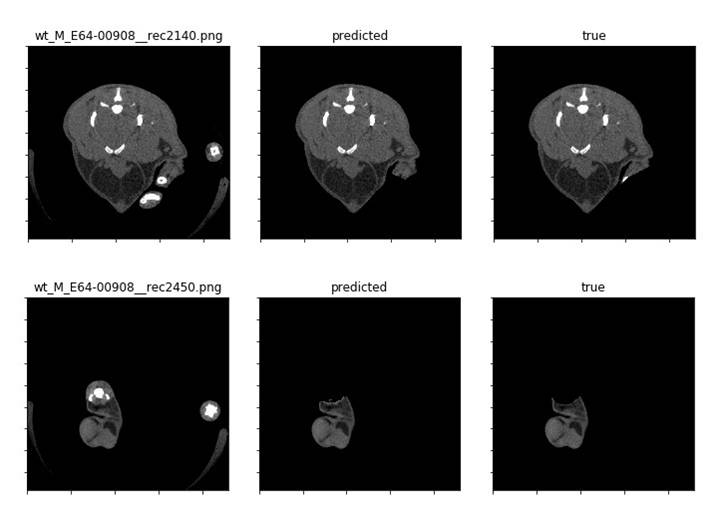

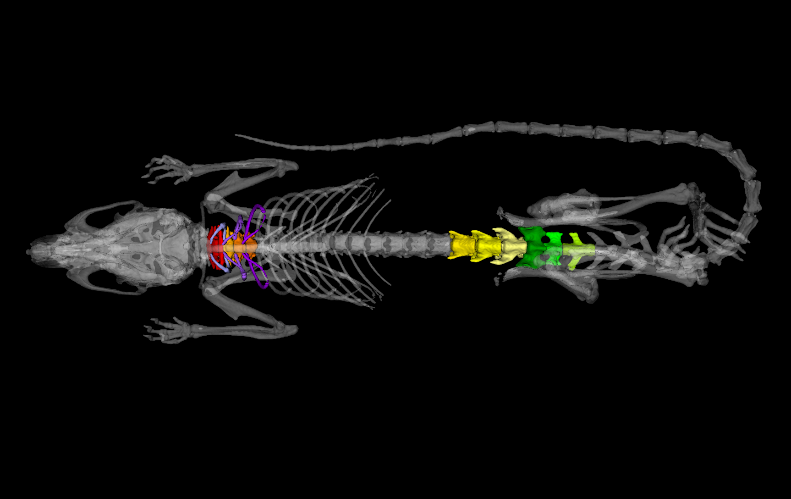

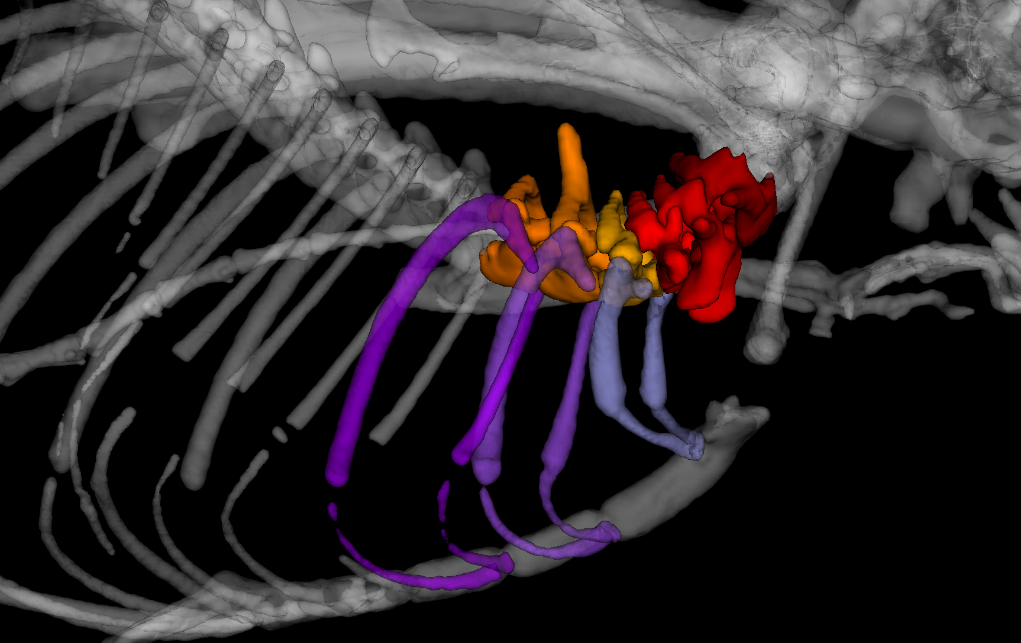

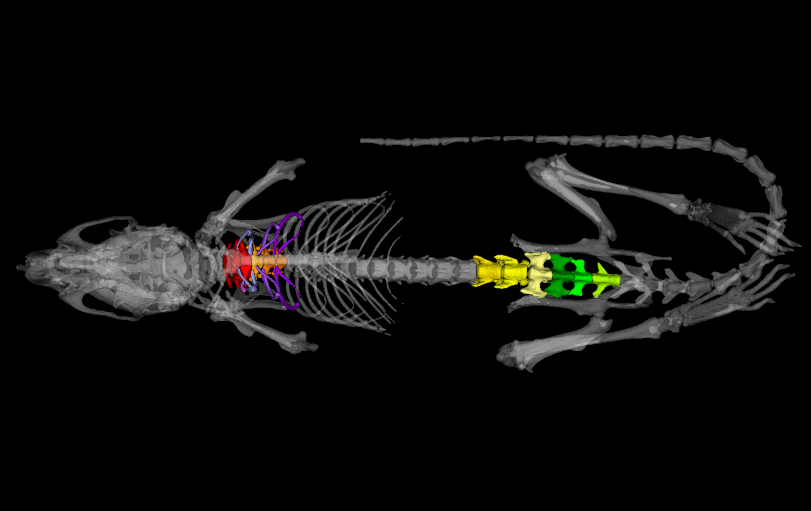

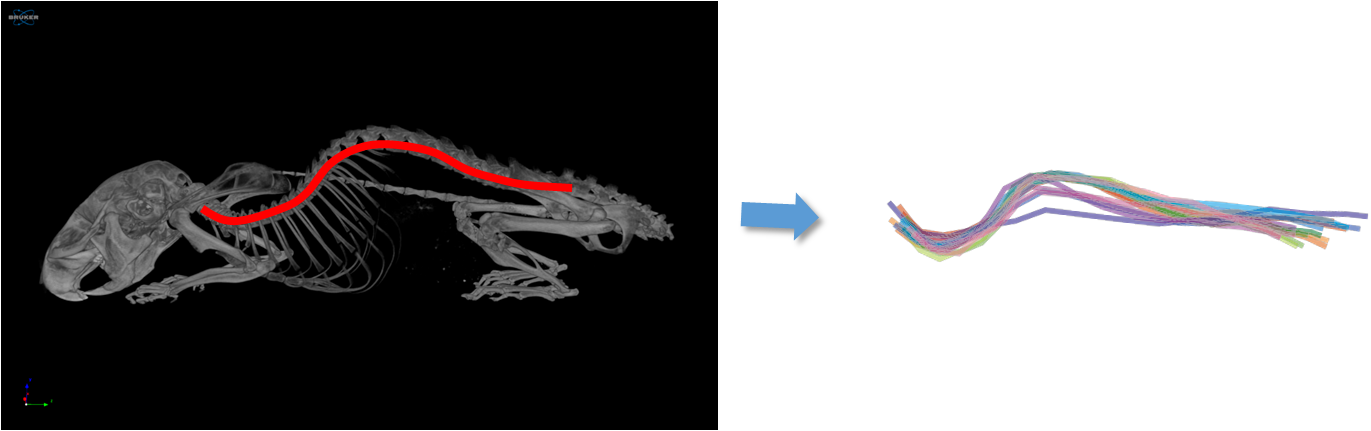

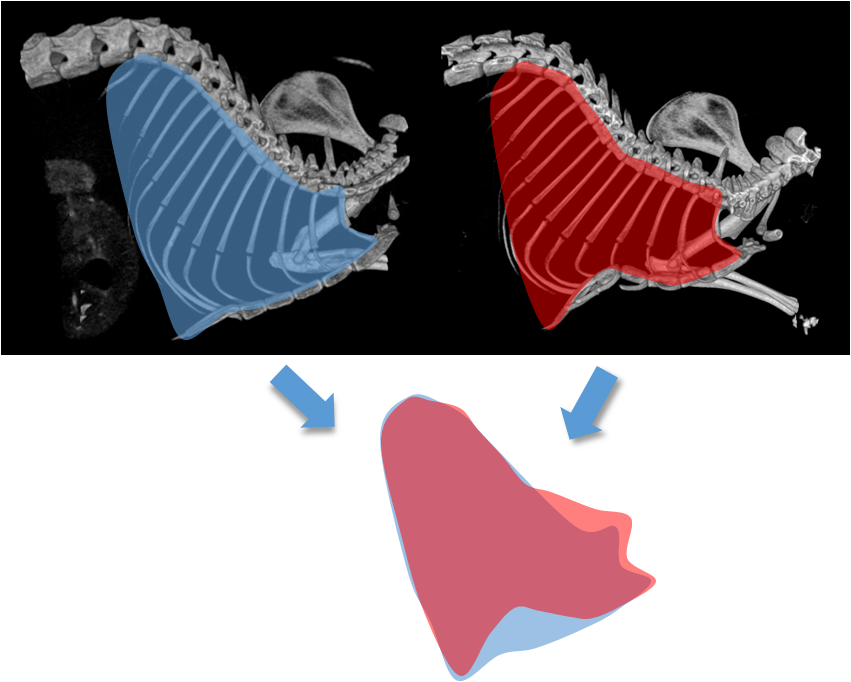

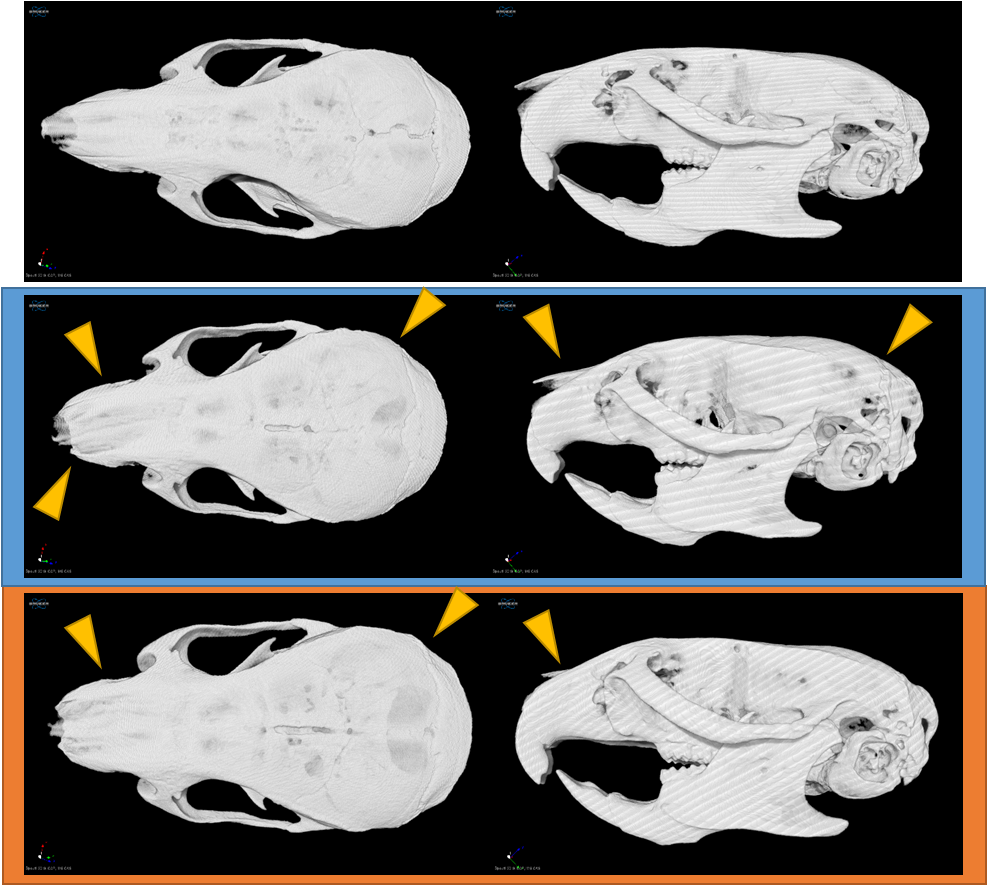

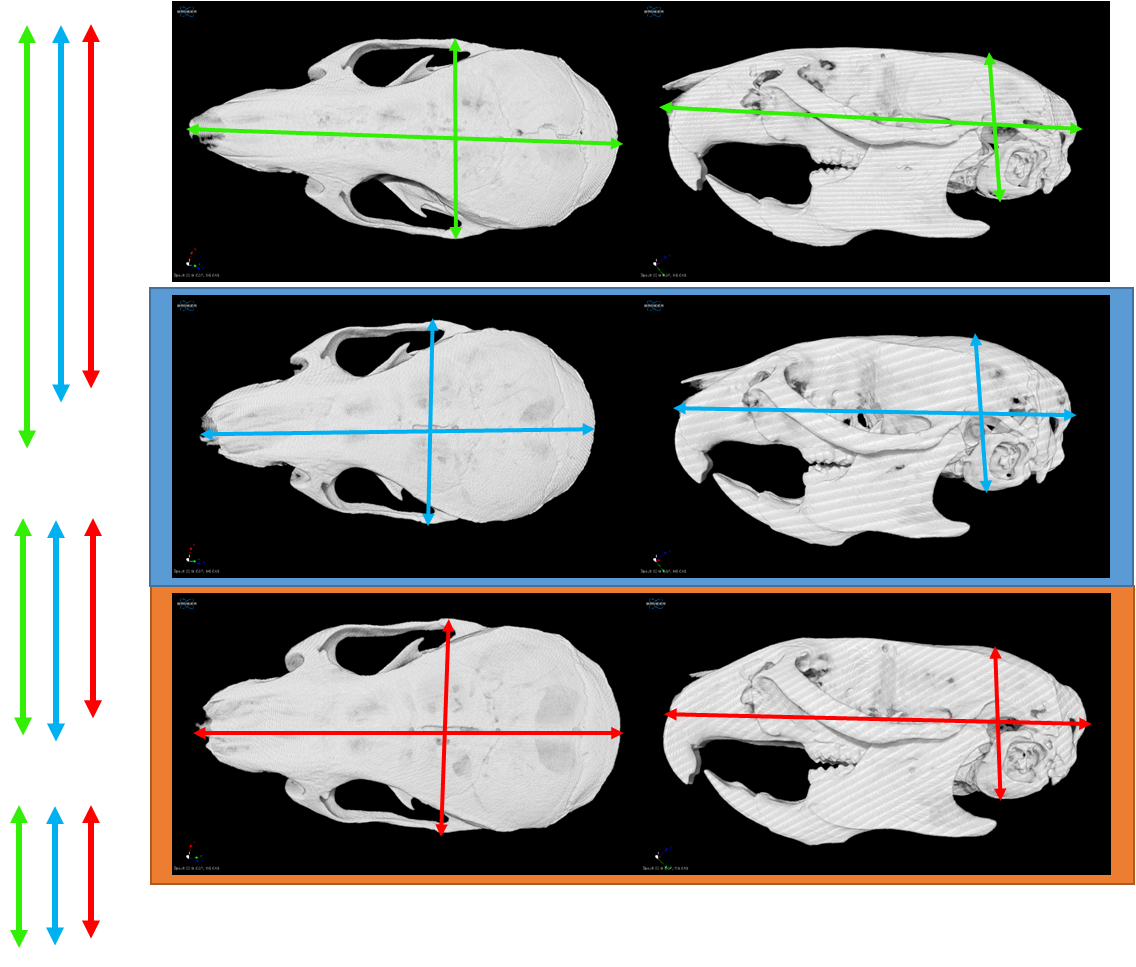

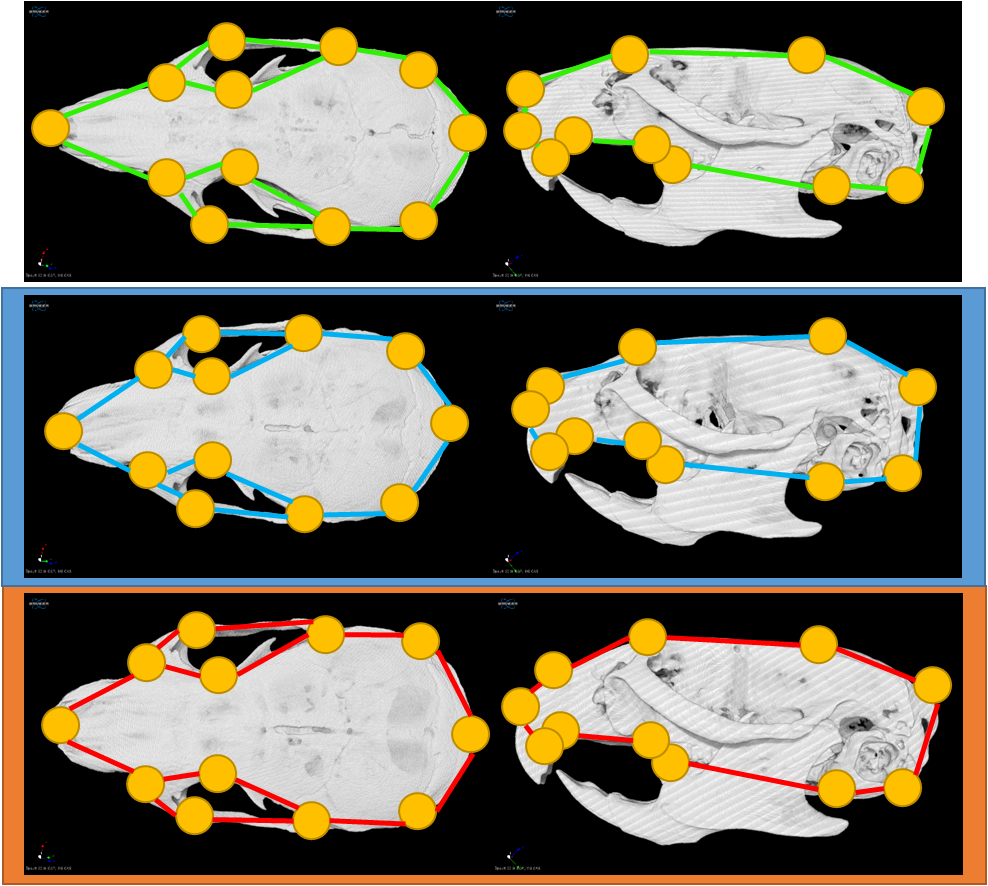

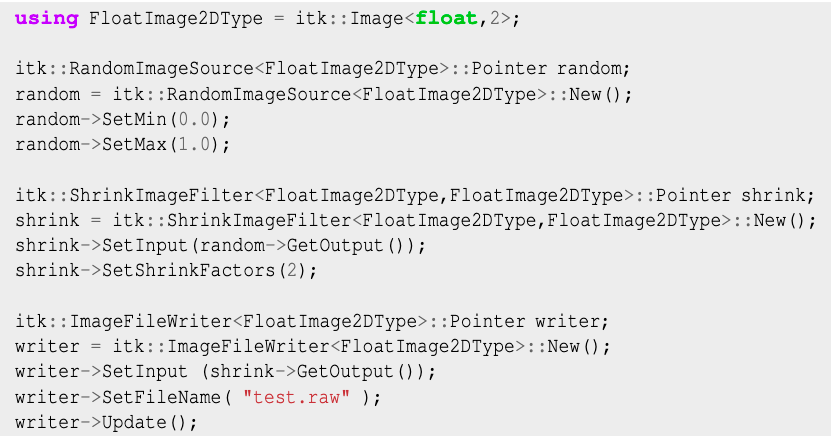

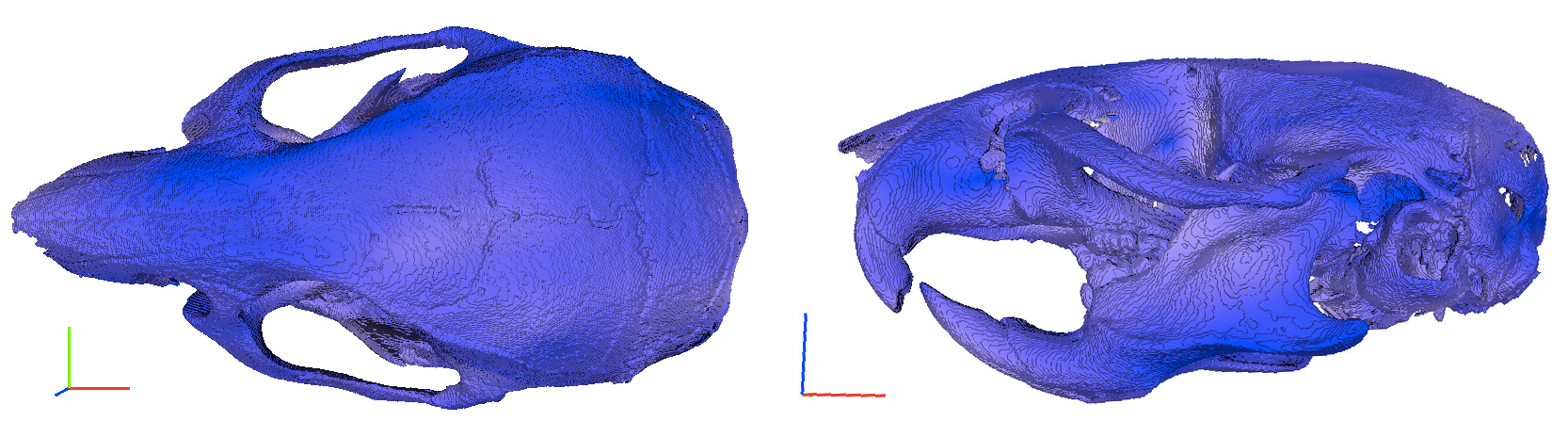

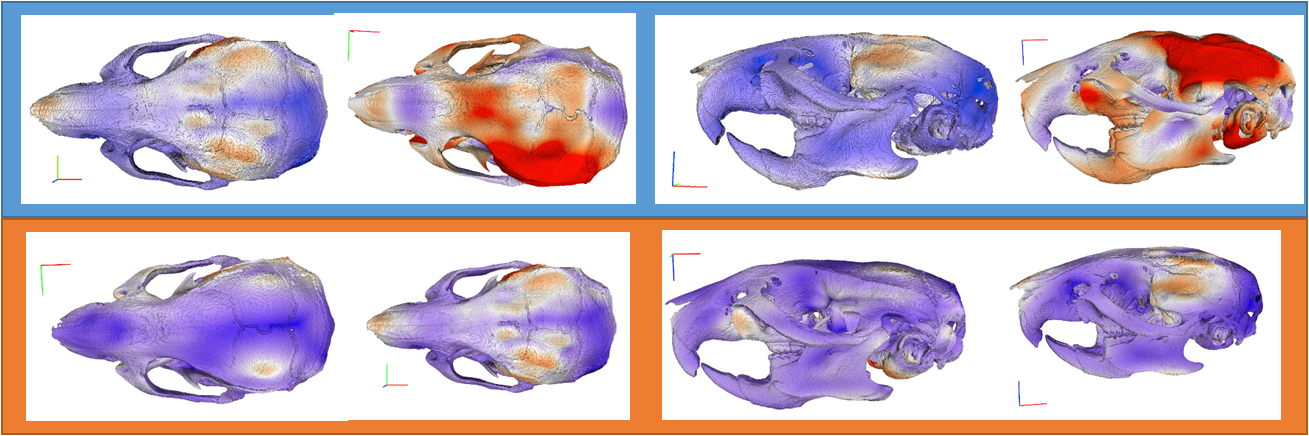

class: center, middle, inverse, title-slide

# My data look like a mouse
## PyData Prague - 24 June 2019
### Karla Fejfarová
### <span class="citation">@karlafej</span>

---
background-image: url("img/mouse3.png")
background-size: contain

## Who am I?

### Bioinformatician at the Czech Centre for Phenogenomics

Working with biologists, analyzing data, automating boring stuff

--

- Renaming files, extracting log files
- OCR
- Excel macros
- Statistical analysis, reports...

--

<br /><br />

- Improve the metabolomics data analysis pipeline
- Automate separating cell populations in cytometry data
- MicroCT image segmentation
- Quantify differences between skulls

???

Bioinformagician

From Excel to hero: renaming files, extracting log files, simple tasks... a few lines in any scripting language

OCR

Bigger projects

---

## OK. What's the Czech Centre for Phenogenomics?

- Research infrastructure
- Located in Vestec, right on the border of Prague
- Expertise: mouse models, mouse phenotyping
- [www.phenogenomics.cz](https://www.phenogenomics.cz/)

.pull-left[
]

.pull-right[
]

???

Looks like a normal building, but there are two underground floors filled with mice.

---

# Mouse model?

.pull-left[
]

--

.pull-right[
]

---

# Mouse model?

.pull-left[
### Model train

<small>
[https://www.flickr.com/photos/chrism70/](https://www.flickr.com/photos/chrism70/)
[https://www.flickr.com/photos/69258414@N08/](https://www.flickr.com/photos/69258414@N08/)
</small>
]

---
count: false
background-image: url("img/intruder1.png")
background-size: contain

# Mouse model?

.pull-left[
### Model train

<small>
[https://www.flickr.com/photos/chrism70/](https://www.flickr.com/photos/chrism70/)
[https://www.flickr.com/photos/69258414@N08/](https://www.flickr.com/photos/69258414@N08/)
</small>
]

.pull-right[
Human and mouse share about 95 % of genes
]

???
95 % of genes

- If you put a human gene into a mouse, it will probably work
- If we have a human with a disease, we are able to make a mouse with the same problem
- A mouse model mimics a disease in humans: research into mechanisms and potential treatments

Developing a mouse model:

- Selective breeding experiments
- Genetic modifications

---

# Phenotyping

## Phenotype x Genotype

.pull-left[
Genes
]

.pull-right[
Features
]

???

affect, influence

---
background-image: url("img/mouse.png")
background-size: contain

# Phenotyping

## Why? How? Who?

- By systematically switching off (knocking out) each of the roughly 20 000 genes, we want to create a catalogue of mammalian gene function
- The knockout mice then undergo standardized tests in order to infer gene function

## The International Mouse Phenotyping Consortium

- 19 research institutions
- [www.mousephenotype.org](https://www.mousephenotype.org/)
- All the data freely available

???

- **Knocking out a gene**: removing the gene or switching it off so that it no longer works
- **Phenotyping** means doing all these tests, from behavior to blood tests; you can do it both with a genetically altered mouse and with a wild-type one

Of course, it's not so simple. Genes can have multiple functions because:

1. Some genes regulate the expression of other genes (gene regulatory networks)
2. Genes produce proteins that often act together in protein complexes, so a modification in one gene can affect the action of proteins produced by other genes
3. Genes produce proteins that are part of the same pathway (e.g. glucose metabolism)
4. Groups of genes can work together during development to establish positional information, which is important, for example, for limb development, digit formation and craniofacial morphology

Further research: combinations of genes

---

# Screens

???

This is a plan of the phenotyping pipeline with all the tests; you can see there are really many of them.

---
class: center, middle

R/Shiny

???
This is an internal web application that shows all the data we have so far, together with basic statistical analysis.

- My colleague Ashkan made this app in Shiny, an R-based web framework.
- **Shiny** is something like Plotly/Dash or Flask in Python.
- The app **serves researchers** who are not familiar with data analysis tools: they can select their genes or tests of interest and look at the data.

---
background-image: url("img/mouse44.png")
background-size: contain

# Cool. What have you discovered?

- Models for investigation of inherited myopia (CCP) <small>[[doi: 10.1186/s13578-019-0280-4]](https://dx.doi.org/10.1186%2Fs13578-019-0280-4)</small>
- Identification of 32 genes that may play a role in diabetes (HZB Munich) <small>[[doi: 10.1038/s41467-017-01995-2]](https://bit.ly/IMPCMetabolism)</small>
- A better way to model Alzheimer's disease in mice (JAX) <small>[[PLOS Genetics, 10.1371/journal.pgen.1008155]](https://doi.org/10.1371/journal.pgen.1008155)</small>
- 67 candidate hearing loss genes <small>[[doi: 10.1038/s41467-017-00595-4]](http://bit.ly/IMPCDeafness)</small>
- 347 genes with eye-related phenotypes, 75% of which were not previously known to be associated with eyesight <small>[[doi: 10.1038/s42003-018-0226-0]](https://www.nature.com/articles/s42003-018-0226-0)</small>

---
class: center, middle, inverse

# Screens

???

Now I'm going to quickly guide you through some of the tests we do, starting with the neurobehavioral screens.

---

# Neurobehavioral screens

.pull-left[
Beam Walk

<video loop controls autoplay muted width="300">
  <source src="img/MVI_2394.mp4" type="video/mp4">
</video>

Open Field Test
]

.pull-right[
Light Dark Box

Grip Strength Test
]

???

**Beam walk**: not only a cool video of a mouse walking on a stick, but also an assessment of balance and fine motor coordination.
We measure the distance the mouse is able to walk on the stick.

**Open field**: we track the mouse's movement with a camera in an arena. Mice usually don't like big open spaces and spend most of their time near the walls.

**Light/dark box**: similar

**Grip strength**: measuring the force the mouse uses to hold the grid

---

# Hearing

.pull-left[
Auditory Brainstem Response
]

.pull-right[
<small>Stimulus: pure tone of 24 kHz frequency, 5 ms duration and intensities ranging from 0 to 85 dB SPL. Each curve represents an average of 600 repetitions.</small>

### Task: automate finding the threshold intensity
]

???

- Mice don't talk to us, but we are able to find out whether they hear a sound by measuring the electrical activity of their brains. Isn't it **amazing**?
- Like a human EEG: activity of the brain after an auditory stimulus
- EEG electrodes, mouse under anesthesia
- ABRs are recorded to clicks (10 µs duration, positive transient) presented from 0-85 dB SPL in 5 dB steps, presented 256 times at 42.6/sec.
- ABRs are recorded at the following frequencies and levels: 6 kHz, 12 kHz, 18 kHz, 24 kHz and 30 kHz (each 0-85 dB SPL), presented in 5 dB intervals. Tone pips are 5 ms in duration, with a 1 ms rise/fall time, presented 256 times at 42.6/sec (optional values). Tone stimuli are presented in decreasing frequency order for a particular sound level, and from low to high stimulus level.

---

# Vision

<video loop controls autoplay muted width="630">
  <source src="img/miles.mp4" type="video/mp4">
</video>

???

If you have ever been to an ophthalmologist: we do the same, looking for abnormalities in the mouse's eye.

Eye morphology

Optical coherence tomography: obtaining high-resolution images of the retina and anterior segment

wiki: uses low-coherence light to capture micrometer-resolution, two- and three-dimensional images from within optical scattering media (e.g., biological tissue).
It is based on low-coherence interferometry, typically employing near-infrared light. The use of relatively long-wavelength light allows it to penetrate into the scattering medium.

wiki: OCT can show cellular organization, photoreceptor integrity and axonal thickness in glaucoma, macular degeneration, diabetic macular edema, multiple sclerosis and other eye diseases or systemic pathologies which have ocular signs.

---

# Metabolism

.pull-left[
]

.pull-right[
]

- Oxygen consumption
- Carbon dioxide production
- Heat exchange
- Food and water intake
- Locomotor activity

???

- Controlled and stable environment

Monitoring the animal's oxygen consumption and carbon dioxide production over time allows us to calculate key metabolic parameters:

- Respiratory exchange ratio
- Energy expenditure: the amount of energy (or calories) an animal needs to carry out a physical function

---

# Metabolism

???

Axes:

- X: time
- Y: oxygen consumption

Light and dark phases: the day starts at 6 in the morning.

Each line is one mouse (KO mice and control mice), which is messy.

---

# Metabolism

???

You can see it better in the averaged data:

- orange: KO
- dark blue: all the controls we have
- light blue: controls measured on the same day
- **activity at night**

---
class: inverse, center, middle

# Now the fun begins!

---

# MicroCT Imaging

.pull-left[
### How does it work?

1. Capture a series of 2D planar X-ray images (the X-ray source and detector move around the mouse between the images)
2. Reconstruct the data into 2D cross-sectional slices / 3D models
]

.pull-right[
]

???

- Allows us to see inside the object; this is really cool!
- It's a medical examination that is not done so often on human patients, and we are doing it on mice!

--

<video loop controls autoplay preload width="400">
  <source src="img/dxa.mp4" type="video/mp4">
</video>

???
**Soft tissues, bones**

- Allows us to "see inside" the object
- Provides information about the microstructure
- Nondestructive

Our instrument:

- Continuously variable magnification with a smallest pixel size of 2.8 µm
- Possibility to resolve object details of 5-6 µm with more than 10 % contrast
- Scanning in a circular or spiral (helical) trajectory
- Full-body mouse and rat scanning, 80 mm scanning diameter, >300 mm scanning length
- Integrated physiological monitoring (breathing, movement detection, ECG) for time-resolved 4D microtomography
- On-screen dose meter to estimate accumulated dose and dose rate

---

# MicroCT Imaging

## Body composition

.pull-left-40[
]

???

Proportion of bones / lean mass / fat in the body:

- bones: green
- lean: yellow
- fat: purple

--

.pull-right-60[
]

???

Cross-section of a mouse body:

- white dots are bones
- green parts are the areas we are interested in
- **Mrs. Indrová!** A tedious task, many hours at the computer
- we were wondering whether we could somehow automate this task

---

# MicroCT Imaging

## Body composition

.pull-left[
Cell segmentation

<small>
Ronneberger, Olaf; Fischer, Philipp; Brox, Thomas (2015). "U-Net: Convolutional Networks for Biomedical Image Segmentation" [[arXiv]](https://arxiv.org/abs/1505.04597)
</small>
]

.pull-right[
Kaggle Carvana Image Masking Challenge

<small>[https://www.kaggle.com/c/carvana-image-masking-challenge](https://www.kaggle.com/c/carvana-image-masking-challenge)</small>
]

???

Once we had defined the problem, we saw that many people were trying to solve similar tasks:

- from cell segmentation in microscopy images
- to finding cars on Kaggle

---

# U-net

<small>
Ronneberger, Olaf; Fischer, Philipp; Brox, Thomas (2015). "U-Net: Convolutional Networks for Biomedical Image Segmentation" [[arXiv]](https://arxiv.org/abs/1505.04597)
</small>

???
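Tracing the feature-map sizes shows why the paper's figure has to "copy and crop" the skip connections. This is a minimal pure-Python sketch that assumes the configuration from the original paper: unpadded 3x3 convolutions, 2x2 max pooling, 2x2 up-convolutions and four down/up steps. `double_conv` and `unet_sizes` are hypothetical helpers written for illustration.

```python
def double_conv(size):
    """Two unpadded 3x3 convolutions; each one trims 1 pixel from every border."""
    out = size - 4
    if out <= 0:
        raise ValueError("input too small for this network depth")
    return out


def unet_sizes(input_size, depth=4):
    """Trace spatial sizes through a U-Net built from unpadded convolutions.

    Returns the output size and, for every skip connection (deepest first),
    the pair (encoder map size, upsampled decoder map size). The encoder map
    is center-cropped to the decoder size before the two are concatenated.
    """
    skip_sizes = []
    size = input_size
    # Contracting path: double conv, then 2x2 max pooling halves the size
    for _ in range(depth):
        size = double_conv(size)
        if size % 2:
            raise ValueError("feature map size must be even before 2x2 pooling")
        skip_sizes.append(size)
        size //= 2
    size = double_conv(size)  # bottleneck at the bottom of the "U"
    # Expanding path: 2x2 up-convolution doubles the size, then double conv
    crops = []
    for encoder_size in reversed(skip_sizes):
        size *= 2
        crops.append((encoder_size, size))
        size = double_conv(size)
    return size, crops


output_size, crops = unet_sizes(572)
print(output_size)  # 388
print(crops)  # [(64, 56), (136, 104), (280, 200), (568, 392)]
```

These numbers match the 572 → 388 example in the paper's architecture figure. With padded convolutions (`padding=1`, as in the `ConvBnRelu2d` defaults shown later), the sizes are preserved and no cropping is needed.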
Deep learning architecture based on fully convolutional networks:

- trainable with a **small data set** (original paper: 20 images)
- trainable end to end
- preferred for biomedical applications

1. The contracting path captures context via context feature maps
2. The expanding path allows precise localisation

---

.pull-left[
## Convolutional layers
]

.pull-right[
]

<small>[https://github.com/fastai/fastai/tree/master/courses/dl1/excel](https://github.com/fastai/fastai/tree/master/courses/dl1/excel)</small>

???

- This is, quickly, how a convolutional layer works
- The filters aren't predefined; they are learned during training

---
class: center, middle

???

This is how we optimize the weights.

---

- PyTorch: open-source deep learning library developed by Facebook, based on Python

```python
import torch.nn as nn

BN_EPS = 1e-5  # assumed value (PyTorch's default); not defined on the original slide


class ConvBnRelu2d(nn.Module):
    """Conv2d -> BatchNorm2d -> ReLU; batch norm and ReLU are optional."""

    def __init__(self, in_channels, out_channels, kernel_size=3, padding=1,
                 dilation=1, stride=1, groups=1, is_bn=True, is_relu=True):
        super().__init__()
        # No bias: a following BatchNorm layer makes it redundant
        self.conv = nn.Conv2d(in_channels, out_channels, kernel_size=kernel_size,
                              padding=padding, stride=stride, dilation=dilation,
                              groups=groups, bias=False)
        self.bn = nn.BatchNorm2d(out_channels, eps=BN_EPS) if is_bn else None
        self.relu = nn.ReLU(inplace=True) if is_relu else None

    def forward(self, x):
        x = self.conv(x)
        # Normalize first, then apply the non-linearity (Conv -> BN -> ReLU)
        if self.bn is not None:
            x = self.bn(x)
        if self.relu is not None:
            x = self.relu(x)
        return x
```

???

Inspired by the ML library Torch (Lua); simple API

---
background-image: url("img/mys.png")
background-size: contain

.pull-right-40[
98% accuracy!
]

???

Our ML approach works and Mrs. Indrová is free!

The accuracy criterion is based on the Dice score: more than 98 %.

DSC = 2TP / (2TP + FP + FN), where TP, FP and FN are the true-positive, false-positive and false-negative pixels.

---

# MicroCT Imaging

## Bone morphology

.pull-left[
]

.pull-right[
]

???

Now I'd like to talk a little about our **future plans**:

- we would like to do something similar for the **skeleton**.
- We are looking at whether the shape of the bones differs, and we are trying to automate the task.

---

# MicroCT Imaging

## Bone morphology

.pull-left[
]

.pull-right[
### Are these skulls different?
]

???

We started with the skull: does the blue skull differ from the orange one?

---

# MicroCT Imaging

## Bone morphology

.pull-left[
]

.pull-right[
]

???

- We can measure height and width
- or we can manually set landmarks and compare the resulting shapes

---

# MicroCT Imaging

## Bone morphology

### Or maybe a different approach?

???

What if we tried to compare the whole skull volume or surface? More image processing than data analysis...

---

.pull-left[
Insight Segmentation and Registration Toolkit (ITK)

Visualization Toolkit (VTK)
]

.pull-right[
C++

Python wrappers:

- [itk](https://pypi.org/project/itk/)
- [SimpleITK](https://itk.org/Wiki/SimpleITK)
- [vtk](https://pypi.org/project/vtk/)
]

???

Biomedical image processing

---

# MicroCT Imaging

## Bone morphology

.pull-left[
]

.pull-right[
<small>
- Iterative closest point transform to bring the skulls to the same orientation and scale
</small>

<small>
- Symmetric forces demons image registration to calculate the deformation field
</small>
]

???

Here we are comparing individual skulls with an average normal skull (preliminary results):

- blue: zero change
- red: biggest change

To wrap it up... what do we need besides automation?

---
background-image: url("img/mouse44.png")
background-size: contain

# What do we need?

## Besides more money, unicorns and an unlimited supply of chocolate...

--

### Pythonistas who are not afraid of biology

### ... maybe you?

???

We'll be hiring more people next year, so if you are interested, let's keep in touch.

---

# Thank you!

.pull-left-60[
- Vendula Novosadová
- Ashkan Zareie
- František Malinka
- František Špoutil
- Sarah Clewell
- Marie Indrová
- Jan Procházka
- Radislav Sedláček
]

.pull-right-40[
]

???
- Ashkan, who made the Shiny app
- František Špoutil, the CT imaging guru
- Marie Indrová, who annotated all the sections

---
background-image: url("img/mouse11.png")
background-size: contain
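
---

# Backup: Dice score

This slide gives a minimal pure-Python sketch of the Dice score behind the "98% accuracy!" figure, DSC = 2TP / (2TP + FP + FN). It assumes binary masks stored as equal-sized nested lists of 0/1 values. `dice_score` is a hypothetical helper written for illustration and is not necessarily the code used in our pipeline, which would more typically operate on NumPy arrays or PyTorch tensors.

```python
def dice_score(pred, truth):
    """Dice similarity coefficient of two binary masks: 2TP / (2TP + FP + FN)."""
    if len(pred) != len(truth) or any(len(p) != len(t) for p, t in zip(pred, truth)):
        raise ValueError("masks must have the same shape")
    tp = fp = fn = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p and t:
                tp += 1  # pixel segmented in both masks
            elif p:
                fp += 1  # predicted, but not in the annotation
            elif t:
                fn += 1  # annotated, but missed by the prediction
    denominator = 2 * tp + fp + fn
    # Two empty masks agree perfectly; treat that as a score of 1.0
    return 1.0 if denominator == 0 else 2 * tp / denominator


predicted = [[0, 1, 1],
             [0, 1, 0]]
annotated = [[0, 1, 0],
             [0, 1, 1]]
print(round(dice_score(predicted, annotated), 3))  # 0.667
```

Here TP = 2, FP = 1 and FN = 1, so DSC = 4 / 6. Unlike plain pixel accuracy, the Dice score ignores true negatives, so a large empty background cannot inflate it.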